EEG Visualiser (Brainrave)

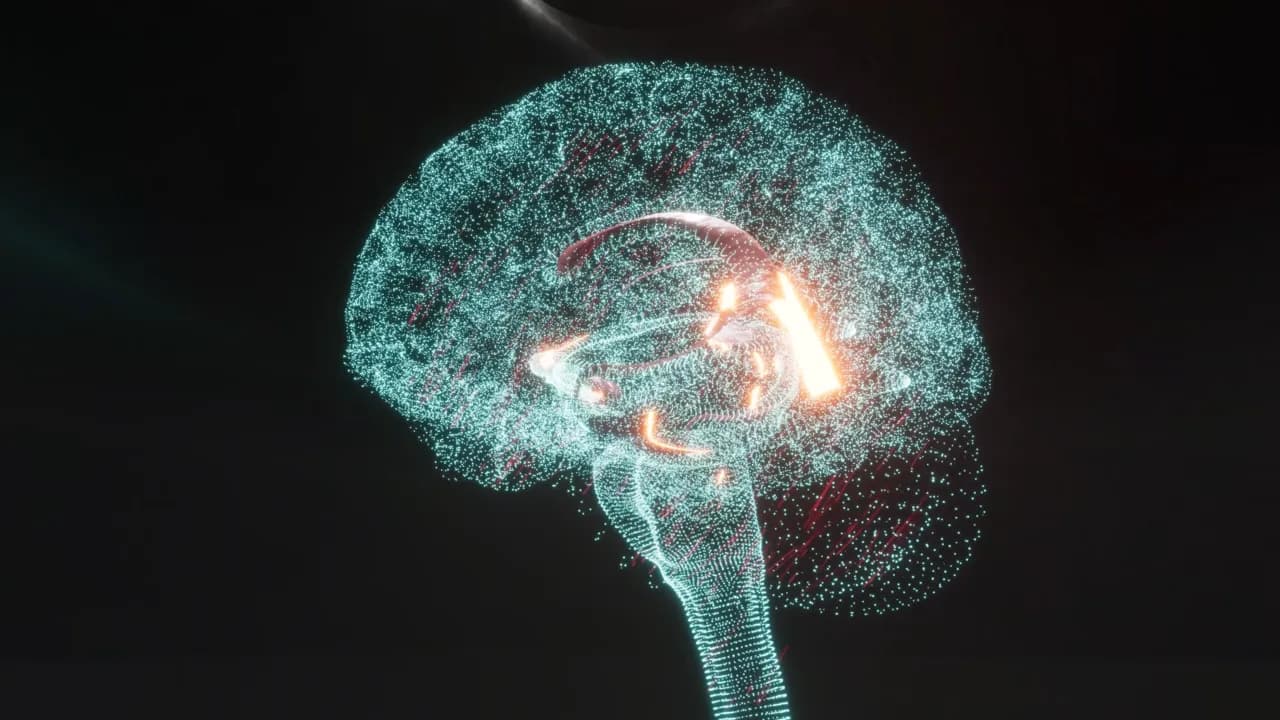

A Unity-powered system that transforms EEG signals and live audio into reactive VFX. Blending neuroscience and entertainment, Brainrave turns brain activity into a live visual performance.


As Co-Founder of Brainrave, I helped design a system that blends neuroscience and immersive entertainment.

It captures live EEG data and audio and translates them into reactive Unity visuals: shaders, particles, and 3D brain models that move and morph in time with both music and thought.

Overview

At its heart, Brainrave is about taking two invisible signals, brainwaves and beats, and making them visible.

EEG data flows in through BrainFlow, while a custom Rust module analyses live audio streams. Both are routed into Unity where an adaptive VFX system ties neural states and music together into one synchronised performance.
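The feature list describes a UDP bridge between the analysis layer and the Unity runtime, but the write-up doesn't document the wire format. The sketch below is a minimal version that makes several assumptions. It sends one JSON object per datagram to a hypothetical localhost port 9000, and the field names (`t`, `alpha`, `beta`, `beat`) are also hypothetical. Treat it as an illustration of the idea, not Brainrave's actual protocol.

```python
import json
import socket
import time
from typing import Tuple

# Hypothetical endpoint: Brainrave's real host and port are not documented.
UNITY_ADDR: Tuple[str, int] = ("127.0.0.1", 9000)


def encode_frame(alpha: float, beta: float, beat: bool, timestamp: float) -> bytes:
    """Pack one frame of EEG band features plus a beat flag as compact JSON."""
    frame = {"t": timestamp, "alpha": alpha, "beta": beta, "beat": beat}
    return json.dumps(frame, separators=(",", ":")).encode("utf-8")


def decode_frame(payload: bytes) -> dict:
    """Inverse of encode_frame, i.e. what the receiving side would do."""
    return json.loads(payload.decode("utf-8"))


def send_frame(sock: socket.socket, alpha: float, beta: float, beat: bool,
               addr: Tuple[str, int] = UNITY_ADDR) -> None:
    """Send one frame as a single UDP datagram (fire-and-forget, no ack)."""
    sock.sendto(encode_frame(alpha, beta, beat, time.time()), addr)
```

UDP suits a live visual feed. If a frame is dropped, the next one simply supersedes it, whereas TCP would stall the stream while it retransmits. On the Unity side, .NET's `System.Net.Sockets.UdpClient` is one way to receive these datagrams.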

How It Works

- EEG Integration – Brain signals streamed with BrainFlow, processed in Python for smoothing, band-pass filtering, and normalisation.

- Rust Beat Detection – Real-time rhythm tracking with millisecond accuracy, piped over UDP.

- Unity Visual Layer – EEG and beat signals drive particle systems, shader morphing, colour shifts, and camera motion.

- Adaptive VFX System – Modular adapter framework makes it easy to plug in new behaviours, from subtle waveforms to full 3D transformations.
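Band-pass filtering isolates one EEG frequency band, for example alpha at roughly 8 to 12 Hz, before its power is measured. The source doesn't say which filter Brainrave uses. This is a pure-Python sketch of one common choice: a second-order biquad band-pass in the form given by the RBJ Audio EQ Cookbook. It assumes a hypothetical 250 Hz sample rate, and `BiquadBandPass` and `rms` are illustrative names.

```python
import math
from typing import Iterable


class BiquadBandPass:
    """Streaming second-order band-pass filter (RBJ Audio EQ Cookbook form,
    constant 0 dB peak gain). Processes one sample per call."""

    def __init__(self, low_hz: float, high_hz: float, sample_rate: float) -> None:
        f0 = math.sqrt(low_hz * high_hz)      # geometric centre of the band
        q = f0 / (high_hz - low_hz)           # bandwidth expressed as Q
        w0 = 2.0 * math.pi * f0 / sample_rate
        alpha = math.sin(w0) / (2.0 * q)      # RBJ's alpha, unrelated to the EEG alpha band
        a0 = 1.0 + alpha
        # Coefficients normalised by a0; b1 is zero for this band-pass form.
        self.b0 = alpha / a0
        self.b2 = -alpha / a0
        self.a1 = -2.0 * math.cos(w0) / a0
        self.a2 = (1.0 - alpha) / a0
        # Filter state: previous two inputs and outputs (direct form I).
        self.x1 = self.x2 = self.y1 = self.y2 = 0.0

    def process(self, x: float) -> float:
        y = self.b0 * x + self.b2 * self.x2 - self.a1 * self.y1 - self.a2 * self.y2
        self.x2, self.x1 = self.x1, x
        self.y2, self.y1 = self.y1, y
        return y


def rms(samples: Iterable[float]) -> float:
    """Root-mean-square amplitude, a simple proxy for band power."""
    values = list(samples)
    return math.sqrt(sum(v * v for v in values) / len(values))
```

For instance, `BiquadBandPass(8.0, 12.0, 250.0)` would pass a 10 Hz component almost unchanged while strongly attenuating 2 Hz drift and 40 Hz noise. A higher-order filter gives sharper band edges; the single biquad keeps the arithmetic visible.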

Why It Matters

This isn’t just visuals for visuals’ sake. Brainrave explores what happens when a performer’s inner state becomes part of the show.

By turning neural data into shared art, it connects audiences with the emotion and energy of live music in ways that feel both novel and surprisingly human.

Key Features

- Real-time EEG parameter mapping

- Rust-based tempo & beat detection

- UDP bridge between neuroscience layer and Unity runtime

- Shader, particle, and mesh deformation linked to EEG and audio

- Modular adapter system for rapid creative expansion
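Real-time parameter mapping can be as simple as a clamped linear remap from a normalised EEG feature to a visual range, optionally shaped by a response curve. This sketch assumes the features have already been normalised to 0..1. `ParamMapping`, `map_features`, and the feature and target names are hypothetical, not Brainrave's actual mapping table.

```python
from dataclasses import dataclass
from typing import Dict, Iterable


@dataclass(frozen=True)
class ParamMapping:
    """Hypothetical mapping from one EEG feature to one visual parameter."""
    feature: str        # e.g. "alpha", assumed normalised to 0..1
    target: str         # e.g. "particle_rate"
    out_min: float
    out_max: float
    gamma: float = 1.0  # >1 makes the response gentler at low values

    def apply(self, value: float) -> float:
        v = min(max(value, 0.0), 1.0)   # clamp to the normalised range
        shaped = v ** self.gamma        # optional response curve
        return self.out_min + shaped * (self.out_max - self.out_min)


def map_features(features: Dict[str, float],
                 mappings: Iterable[ParamMapping]) -> Dict[str, float]:
    """Turn normalised EEG features into named visual parameters.

    A feature missing from this frame is treated as 0.0.
    """
    return {m.target: m.apply(features.get(m.feature, 0.0)) for m in mappings}
```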

Future Potential

Brainrave started as an MVP, but its roadmap reaches further:

- AI-based EEG forecasting to predict neural states in advance

- Smart-home neurointegration, syncing lighting or environments to mood

- Holographic displays for immersive installations at scale

The EEG Visualiser is just the first step: a proof of concept that shows how technology, music, and the mind can merge into something performative and communal. For me, it’s not just a technical challenge but a glimpse of how entertainment might feel when our thoughts are part of the stage.

Highlights

EEG Integration

EEG signals streamed via BrainFlow, processed in Python for smoothing, filtering, and real-time normalisation.
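Smoothing and real-time normalisation can live in one small stateful object:

- An exponential moving average tames sample-to-sample jitter.
- A rolling min-max rescale keeps the output in 0..1 as the wearer's baseline drifts.

This is a sketch under assumptions. `FeatureNormaliser`, the smoothing factor, and the window length are illustrative, not taken from the project.

```python
from collections import deque
from typing import Deque, Optional


class FeatureNormaliser:
    """EMA smoothing followed by rolling min-max normalisation to 0..1.

    smoothing and window are hypothetical tuning values, not Brainrave's.
    """

    def __init__(self, smoothing: float = 0.2, window: int = 500) -> None:
        self.smoothing = smoothing
        self.history: Deque[float] = deque(maxlen=window)
        self.smoothed: Optional[float] = None

    def update(self, raw: float) -> float:
        # Exponential moving average: s <- s + k * (x - s)
        if self.smoothed is None:
            self.smoothed = raw
        else:
            self.smoothed += self.smoothing * (raw - self.smoothed)
        self.history.append(self.smoothed)
        lo, hi = min(self.history), max(self.history)
        if hi - lo < 1e-12:
            return 0.5  # flat signal so far: park the output mid-range
        return (self.smoothed - lo) / (hi - lo)
```

Recomputing `min` and `max` over the window costs O(window) per update, which is fine at EEG feature rates. A monotonic deque would bring that down to amortised O(1) if needed.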

Rust Beat Detection

Custom Rust module detects beats and audio onsets with millisecond accuracy, synchronised with Unity over UDP.
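The write-up doesn't describe the Rust module's algorithm. To illustrate the general idea only (and in Python rather than Rust), a classic energy-comparison onset detector flags any audio frame whose energy exceeds a multiple of the recent average. The class name, threshold, history length, refractory period, and energy floor are all hypothetical defaults.

```python
from collections import deque
from typing import Deque, Sequence


class EnergyOnsetDetector:
    """Flags a beat when a frame's mean energy jumps above the recent average.

    history_frames, threshold, refractory_frames and min_energy are
    hypothetical defaults, not the settings of Brainrave's Rust module.
    """

    def __init__(self, history_frames: int = 43, threshold: float = 1.5,
                 refractory_frames: int = 4, min_energy: float = 1e-6) -> None:
        self.history: Deque[float] = deque(maxlen=history_frames)
        self.threshold = threshold
        self.refractory_frames = refractory_frames
        self.min_energy = min_energy  # ignore near-silent frames
        self.cooldown = 0

    def process_frame(self, frame: Sequence[float]) -> bool:
        energy = sum(s * s for s in frame) / len(frame)
        is_beat = False
        if self.history and self.cooldown == 0:
            average = sum(self.history) / len(self.history)
            is_beat = energy > max(self.threshold * average, self.min_energy)
        if is_beat:
            # Suppress re-triggering on the tail of the same transient.
            self.cooldown = self.refractory_frames
        elif self.cooldown > 0:
            self.cooldown -= 1
        self.history.append(energy)
        return is_beat
```

With 1024-sample frames at 44.1 kHz, 43 frames of history cover about one second of audio. Spectral-flux detectors are generally more robust on dense mixes, but the energy version shows the shape of the detection loop.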

Adaptive Visual FX

Unity VFX layer with shaders, particles, and mesh deformation driven by modular adapters for rapid creative iteration.


Inspired by This Project?

Let's work together to create something equally amazing for your next Unity project. Whether it's VR, EEG visualisation, or game development, I'm here to help.